News

The science behind Amazon Alexa TaskBot TWIZ: 4 publications at top NLP conferences

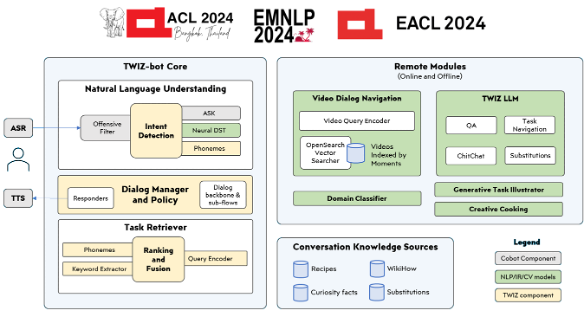

The Alexa TaskBot TWIZ team is having lots of fun publishing the research results of our journey: 4 papers so far, at the ACL2024, EMNLP2024 and EACL2024 conferences.

The first article describes our research on PlanLLM@EACL2024, a model that can ground its behavior on a step-by-step plan of instructions. It was the first of these models and paved the way to MultimodalPlanLLM@EMNLP2024, where the plan is multimodal and the user also provides multimodal queries. The key takeaway from this research is that we can ground and guardrail the behavior of LLMs on a sequence of constraints, while supporting multimodal inputs and outputs.

Image and video generation was also an active research focus. Paving the way towards diffusion methods for input sequences, we proposed a method to illustrate sequences of step-by-step instructions. In our work at ACL2024, we demonstrated that visual and semantic coherence can be achieved with a new LLM for contextual captions and by leveraging information from the denoising latent space.

Simulating real-world users was a critical challenge in ensuring the robustness of the entire system. Building on a capture-and-replay tool to simulate users, we researched how LLMs can emulate users and their varying traits. In mTAD@EMNLP2024, we proposed a mixture of LoRAs that capture specific language traits and dynamically inject them into the core language model distribution. This allows simulating a wide range of different users, thus creating an LLM that can talk to another LLM!

These are exciting times that we are living in!