News

Multimodal Systems and Google Researchers present a novel Generative AI algorithm at ACL2024

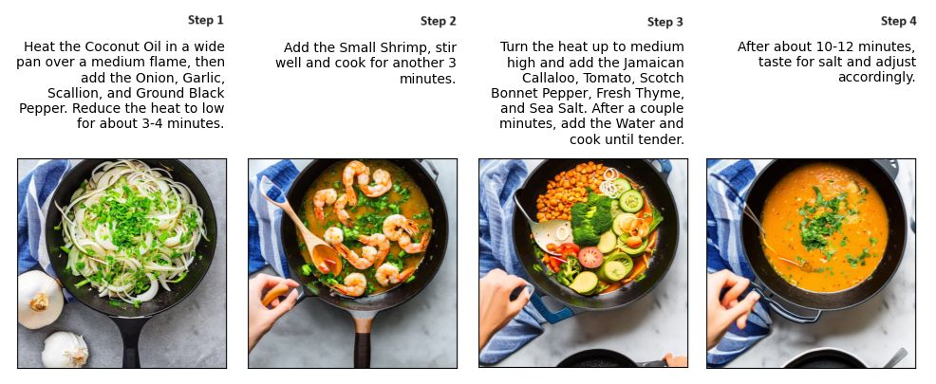

Researchers of the Multimodal Systems group and Google proposed a new Generative AI algorithm to synthesize coherent sequences of images representing manual tasks, such as DYI, cooking.

The key goal is to make Generative-AI capable of handling inputs that sequences of instructions. This is a step change in relation to the state of the art that it is only capable of handling one instruction at a time. The new idea leverages Large Language Model and Latent Diffusion Models to make the generation process aware of the full sequence. First, we transform the sequence into canonical sequence of instructions to maintain the semantic coherence of the sequence. In addition, to maintain the visual coherence of the image sequence, we introduce a copy mechanism to initialize reverse diffusion processes with a latent vector iteration from a previously generated image from a relevant step.

The results are surprising! You can see them on the project web page.

This idea builds on the growing insights that the Vision and Language community is getting from the latent space of LDM. Overall, both strategies can condition the reverse diffusion process on the sequence of instruction steps and tie the contents of the current image to previous instruction steps and corresponding images.